Problem

People often struggle to navigate a place they have never visited before, such as a hospital. Since most people carry a mobile device equipped with cameras and inertial measurement units (IMUs), can data crowdsourced from these devices be used collectively to keep people from getting lost?

Method

Use the crowdsourced visual and inertial data to create a map of the area, track each user's motion, and ultimately provide the user with a planned path.

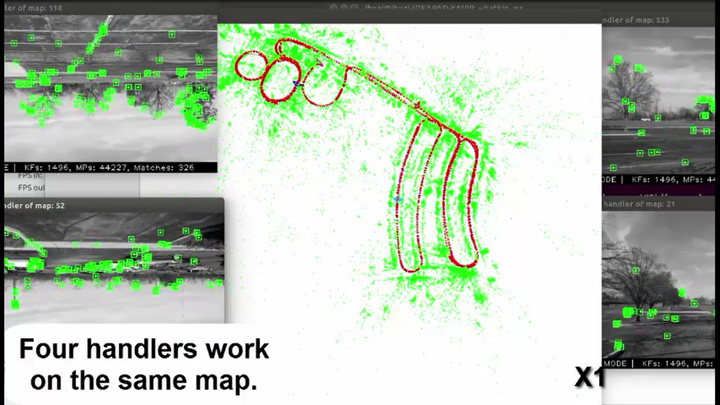

To create a collective map and estimate a user's location in it, a client-server architecture is designed. A client running on a user's device estimates the device's incremental motion from its sensor data, and the server constructs a map of keyframes and landmarks from the information sent by all clients. Each handler on the server is responsible for the data from one client.
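The division of labour described above can be illustrated with a minimal Python sketch. It is not the project's implementation: the names `Keyframe`, `SharedMap`, `ClientHandler`, and `MapServer` are hypothetical, poses are reduced to `(x, y, heading)`, and landmarks are assumed to carry IDs that are already consistent across clients, whereas a real system has to establish those correspondences by feature matching.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple

KeyframeKey = Tuple[str, int]  # (client_id, frame_id)


@dataclass
class Keyframe:
    """A keyframe sent by a client (hypothetical, heavily simplified)."""
    client_id: str
    frame_id: int
    pose: Tuple[float, float, float]  # assumed (x, y, heading), not a full 6-DoF pose
    landmark_ids: List[int]           # assumed to be globally consistent IDs


@dataclass
class SharedMap:
    """Collective map of keyframes and landmarks held by the server."""
    keyframes: Dict[KeyframeKey, Keyframe] = field(default_factory=dict)
    # landmark id -> keys of the keyframes that observed it
    landmarks: Dict[int, List[KeyframeKey]] = field(default_factory=dict)

    def insert(self, kf: Keyframe) -> None:
        key = (kf.client_id, kf.frame_id)
        self.keyframes[key] = kf
        for landmark_id in kf.landmark_ids:
            self.landmarks.setdefault(landmark_id, []).append(key)


class ClientHandler:
    """Handles the data stream of exactly one client."""

    def __init__(self, client_id: str, shared_map: SharedMap) -> None:
        self.client_id = client_id
        self.shared_map = shared_map
        self.received = 0

    def on_keyframe(self, kf: Keyframe) -> None:
        if kf.client_id != self.client_id:
            raise ValueError("keyframe belongs to a different client")
        self.shared_map.insert(kf)
        self.received += 1


class MapServer:
    """Owns the shared map and creates one handler per client."""

    def __init__(self) -> None:
        self.shared_map = SharedMap()
        self.handlers: Dict[str, ClientHandler] = {}

    def handler_for(self, client_id: str) -> ClientHandler:
        if client_id not in self.handlers:
            self.handlers[client_id] = ClientHandler(client_id, self.shared_map)
        return self.handlers[client_id]

    def clients_observing(self, landmark_id: int) -> Set[str]:
        """Clients whose keyframes observed the given landmark."""
        keys = self.shared_map.landmarks.get(landmark_id, [])
        return {client_id for client_id, _ in keys}


if __name__ == "__main__":
    server = MapServer()
    server.handler_for("phone_a").on_keyframe(
        Keyframe("phone_a", 0, (0.0, 0.0, 0.0), [1, 2]))
    server.handler_for("phone_b").on_keyframe(
        Keyframe("phone_b", 0, (1.0, 0.0, 0.0), [2, 3]))
    # Landmark 2 was observed by both devices, so it links their sessions.
    print(sorted(server.clients_observing(2)))
```

In this sketch, a landmark seen by more than one client is what ties separate sessions into a single map; keeping one handler per client keeps each device's stream isolated while all handlers write into the same shared map.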

Key results

The architecture is realized in two prototypes based on ORB-SLAM2: one processes monocular camera frames, and the other processes monocular camera frames together with IMU data. Both prototypes were tested with multi-session data collected by mobile devices, demonstrating map reuse, robust loop closure, and accurate real-time collaborative mapping.

Related papers: